A goodness‐of‐fit approach to inference procedures for the kappa statistic: Confidence interval construction, significance‐testing and sample size estimation - Kraemer - 1994 - Statistics in Medicine - Wiley Online Library

Percent Agreement, Kappa Coefficient, and 95% Confidence Interval for... | Download Scientific Diagram

Weighted kappa coefficients and 95% confidence intervals of accordance... | Download Scientific Diagram

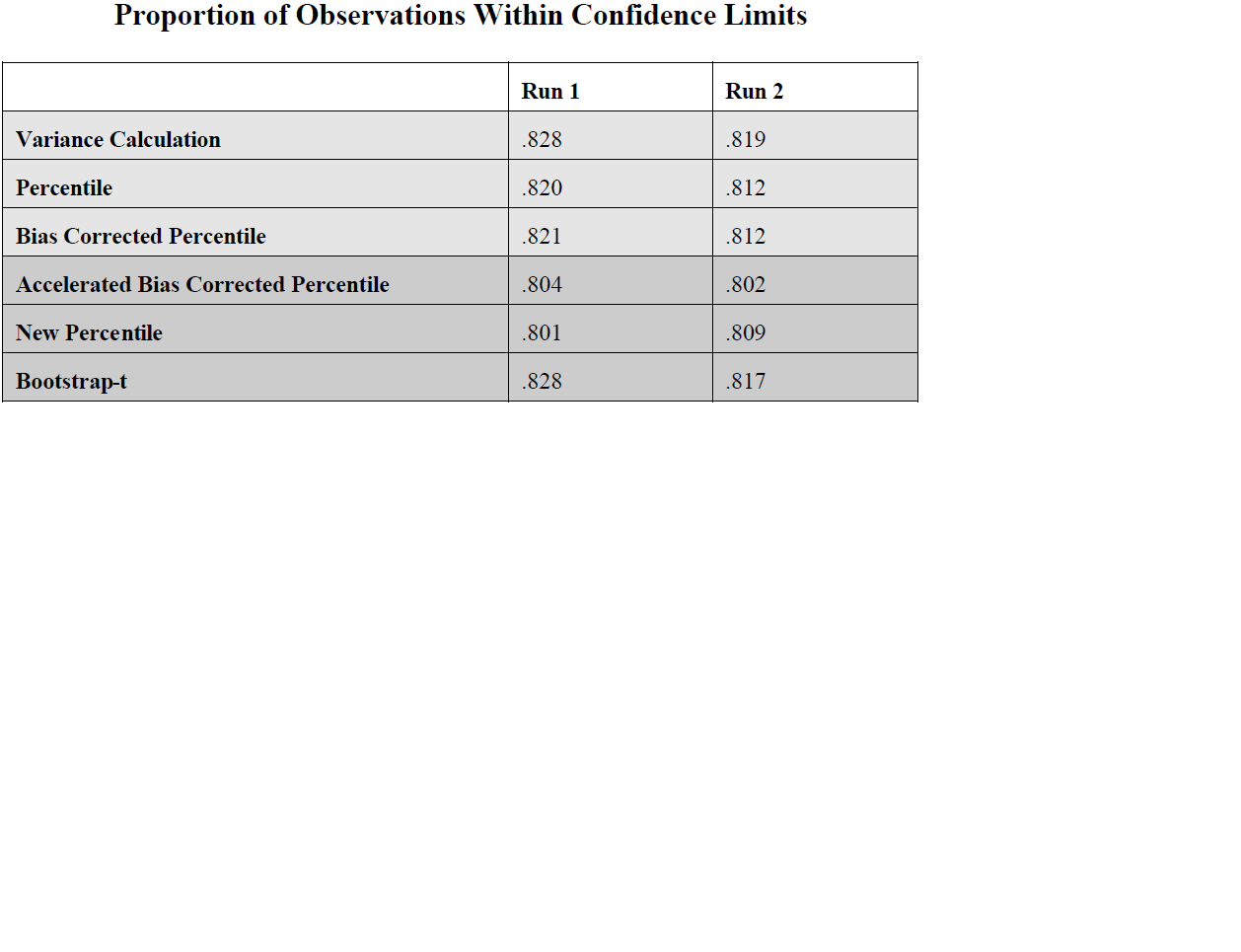

Comparison of Bootstrap Confidence Interval Methods in a Real Application: The Kappa Statistic in Industry

Macro for Calculating Bootstrapped Confidence Intervals About a Kappa Coefficient | Semantic Scholar

Table 3 from Sample Size Requirements for Interval Estimation of the Kappa Statistic for Interobserver Agreement Studies with a Binary Outcome and Multiple Raters | Semantic Scholar

GitHub - IBMPredictiveAnalytics/STATS_WEIGHTED_KAPPA: Weighted Kappa Statistic Using Linear or Quadratic Weights

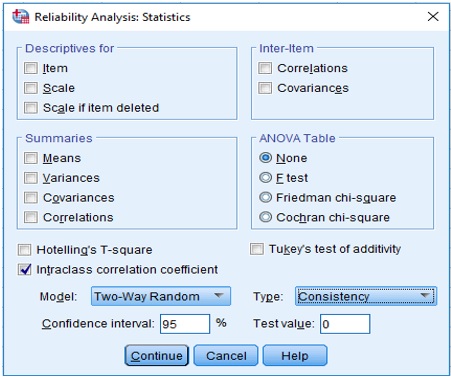

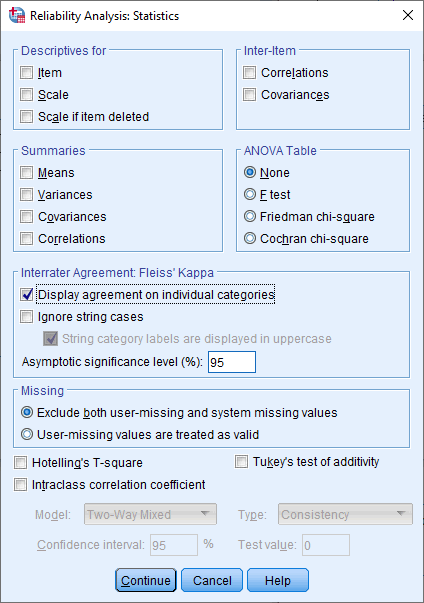

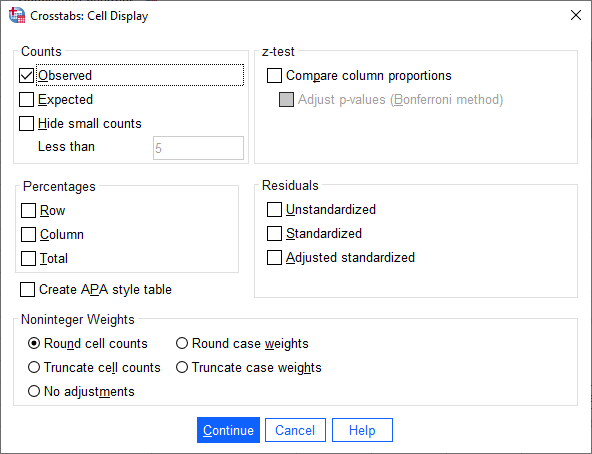

Cohen's kappa in SPSS Statistics - Procedure, output and interpretation of the output using a relevant example | Laerd Statistics