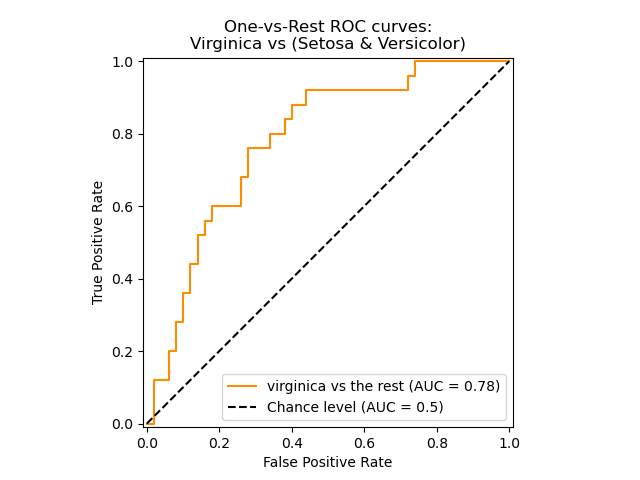

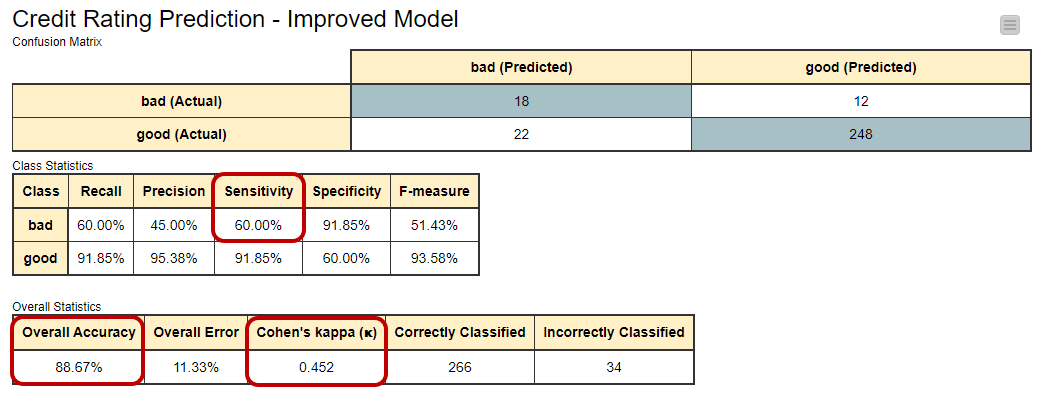

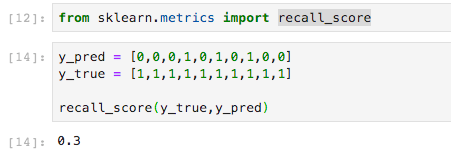

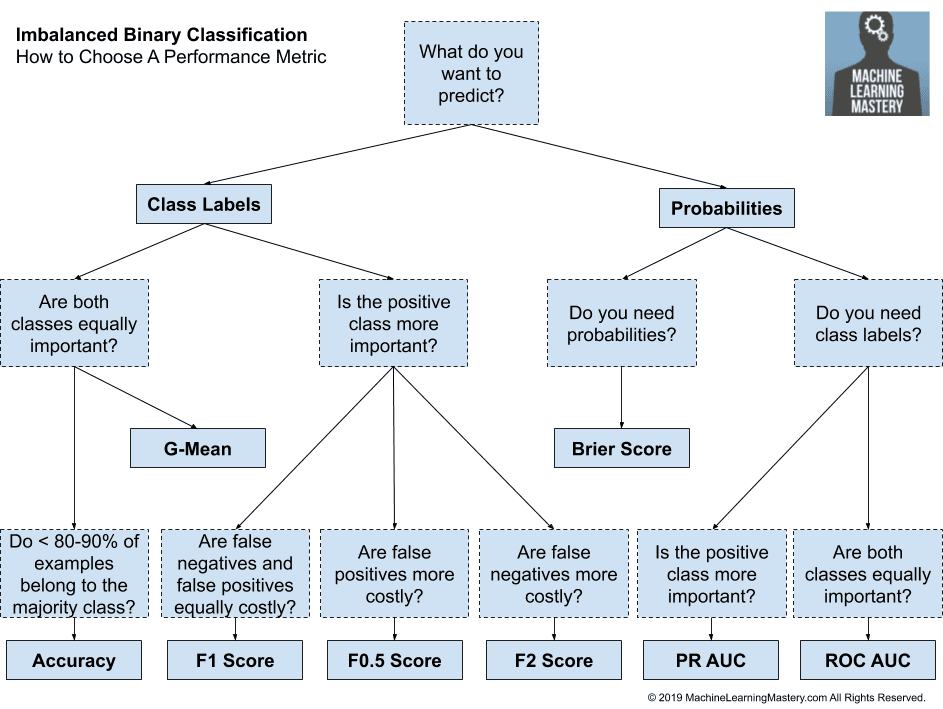

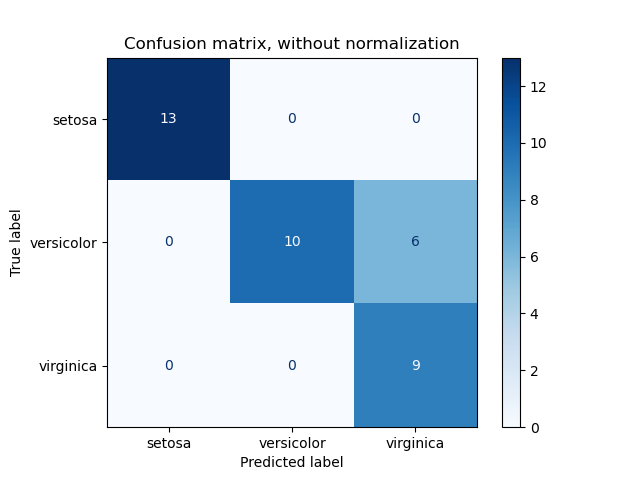

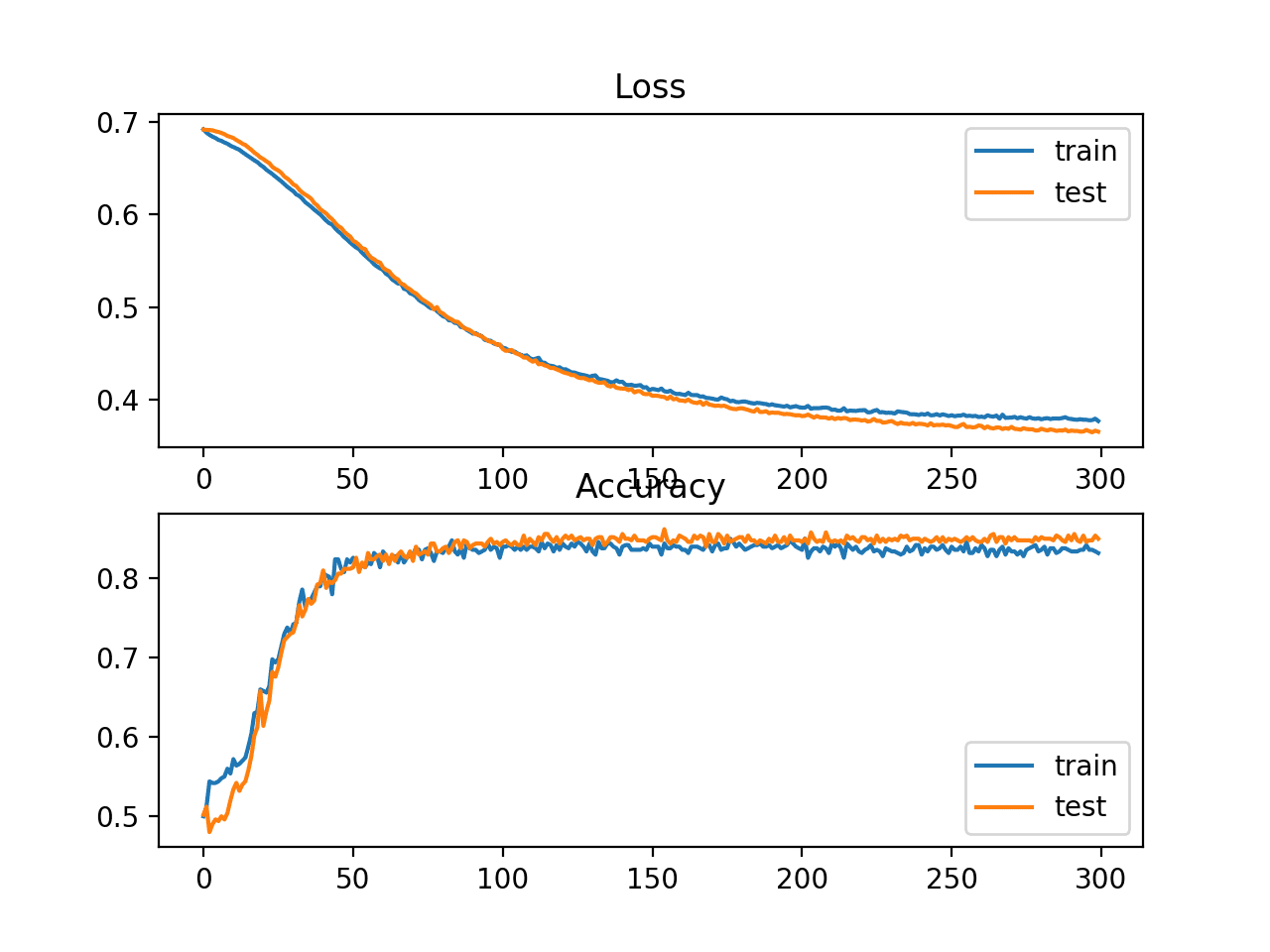

How to Calculate Precision, Recall, F1, and More for Deep Learning Models - MachineLearningMastery.com
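A minimal from-scratch sketch of the metrics named in that title, assuming binary 0/1 labels and a model that outputs one probability per example. It is not taken from the linked article. The helper names `to_labels` and `binary_metrics` and the 0.5 threshold are hypothetical choices for illustration. Zero denominators are reported as 0.0, which is also an assumption.

```python
def to_labels(probabilities, threshold=0.5):
    """Turn predicted probabilities into hard 0/1 labels.

    Assumption: a sigmoid-style output where p >= threshold means the positive class.
    """
    return [1 if p >= threshold else 0 for p in probabilities]


def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 from true and predicted labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    pairs = list(zip(y_true, y_pred))
    # Confusion-matrix counts for the positive class.
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn
    accuracy = (tp + tn) / len(pairs)
    # Assumption: report 0.0 instead of dividing by zero.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}
```

For example, `binary_metrics([1, 0, 1, 1, 0, 1], to_labels([0.9, 0.2, 0.4, 0.7, 0.6, 0.8]))` gives 3 true positives, 1 false positive and 1 false negative, so precision, recall and F1 are all 0.75. If scikit-learn is available, `sklearn.metrics.precision_score`, `recall_score` and `f1_score` compute the same quantities for binary labels and make a convenient cross-check.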

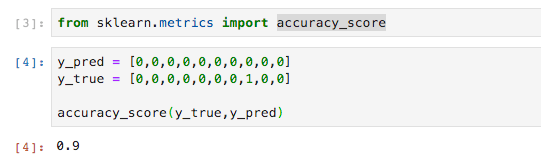

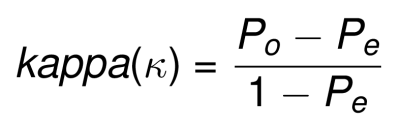

Adding Fleiss's kappa in the classification metrics? · Issue #7538 · scikit-learn/scikit-learn · GitHub
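The issue above asks for Fleiss' kappa as a classification metric. Below is a minimal pure-Python sketch of the standard formula. It assumes the input is a subjects × categories table of counts, with the same number of raters for every subject, and the function name is hypothetical. Each subject's agreement is P_i = (Σ_j n_ij² − n) / (n(n − 1)). Chance agreement is P_e = Σ_j p_j², where p_j is the share of all ratings in category j. Kappa is (P̄ − P_e) / (1 − P_e).

```python
def fleiss_kappa(table):
    """Fleiss' kappa from a subjects x categories table of rating counts.

    table[i][j] is the number of raters who put subject i into category j.
    Every row must sum to the same number of raters n (n >= 2).
    """
    n_subjects = len(table)
    if n_subjects == 0:
        raise ValueError("table must contain at least one subject")
    n_raters = sum(table[0])
    if n_raters < 2 or any(sum(row) != n_raters for row in table):
        raise ValueError("every subject needs the same number (>= 2) of ratings")
    # P_i: fraction of rater pairs that agree on subject i.
    p_i = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in table
    ]
    p_bar = sum(p_i) / n_subjects
    # p_j: proportion of all ratings that fall into category j.
    total = n_subjects * n_raters
    p_j = [sum(row[j] for row in table) / total for j in range(len(table[0]))]
    # P_e: agreement expected by chance from the category proportions.
    p_e = sum(p * p for p in p_j)
    if p_e == 1.0:
        # Every rating is in a single category, so kappa is undefined.
        raise ValueError("kappa is undefined when all ratings share one category")
    return (p_bar - p_e) / (1 - p_e)
```

On a 10-subject, 14-rater, 5-category table such as `[[0, 0, 0, 0, 14], [0, 2, 6, 4, 2], [0, 0, 3, 5, 6], [0, 3, 9, 2, 0], [2, 2, 8, 1, 1], [7, 7, 0, 0, 0], [3, 2, 6, 3, 0], [2, 5, 3, 2, 2], [6, 5, 2, 1, 0], [0, 2, 2, 3, 7]]`, P̄ ≈ 0.378 and P_e ≈ 0.213, so kappa ≈ 0.210. If statsmodels is already a dependency, `statsmodels.stats.inter_rater.fleiss_kappa` is an existing implementation to compare against.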

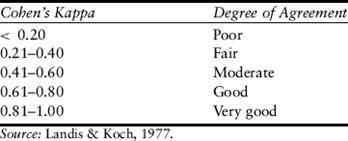

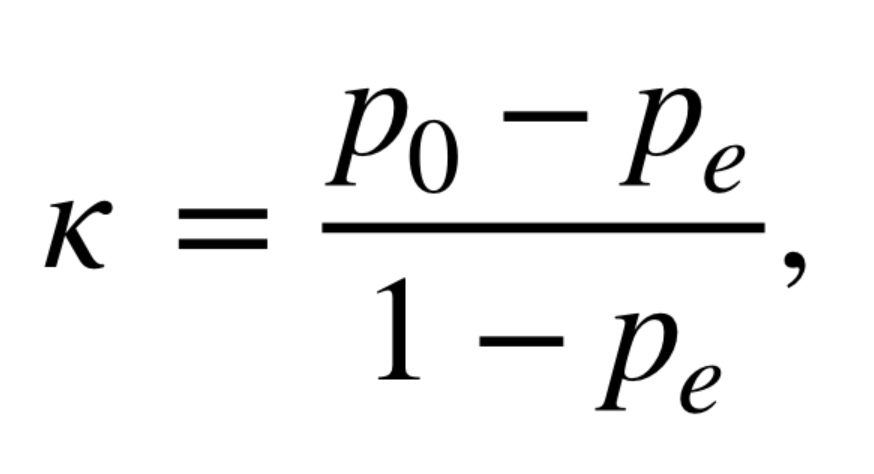

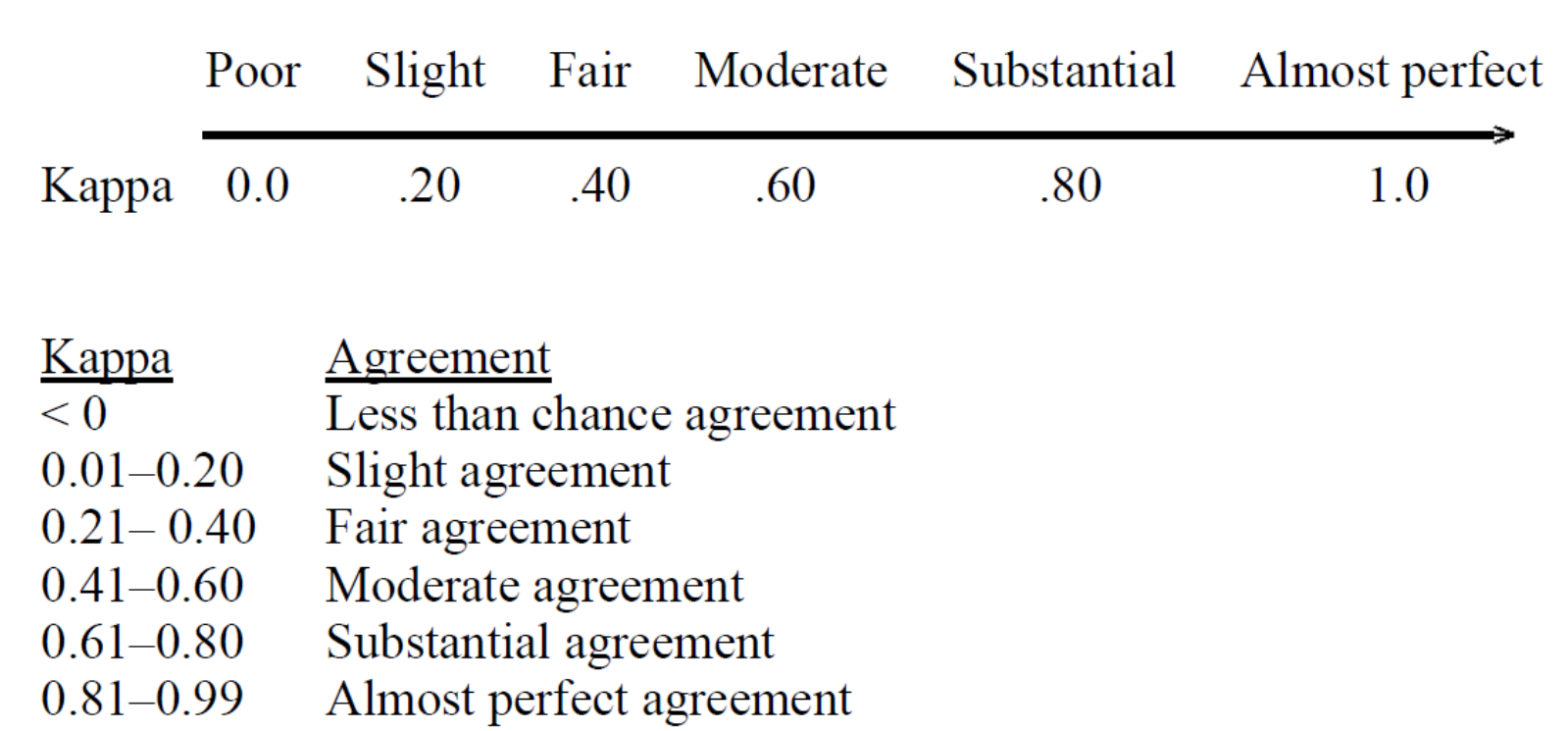

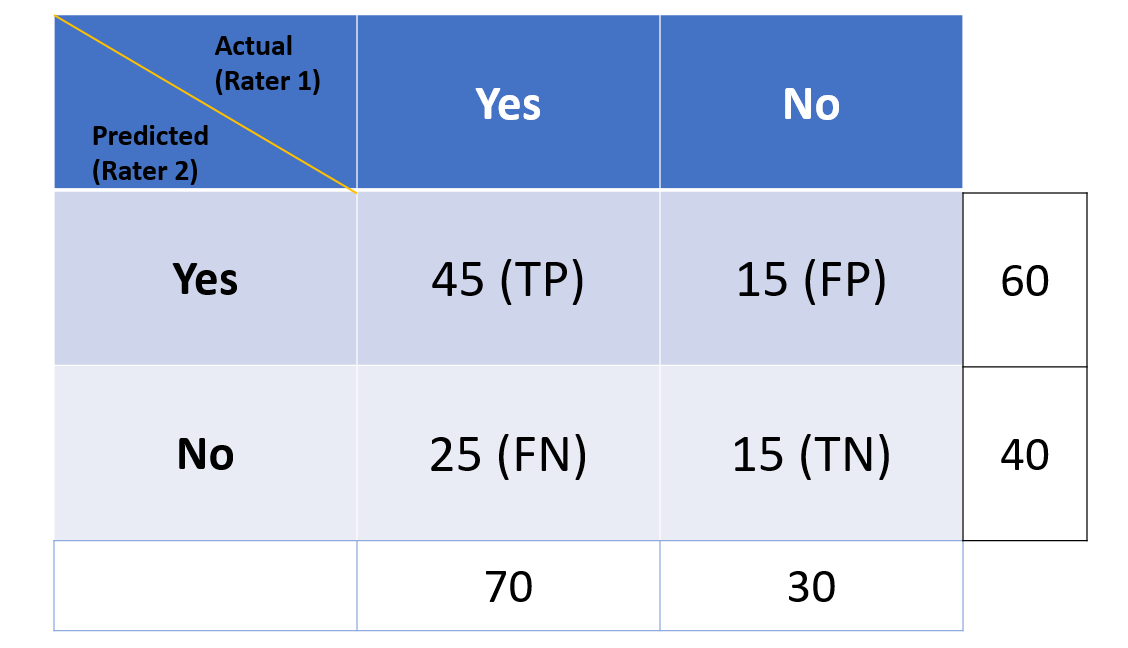

Inter-rater agreement Kappas. a.k.a. inter-rater reliability or… | by Amir Ziai | Towards Data Science

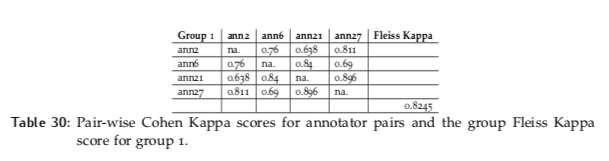

Inter-Annotator Agreement (IAA). Pair-wise Cohen kappa and group Fleiss'… | by Louis de Bruijn | Towards Data Science
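The pair-wise approach in the last title can be sketched like this. Compute Cohen's kappa, κ = (p_o − p_e) / (1 − p_e), for every pair of annotators, then average the pairs. This is a minimal illustration, not the linked article's code. The function names and the `annotations` dict layout (annotator name → list of labels over the same items, in the same order) are hypothetical. So is the choice to return 1.0 in the degenerate case where both annotators use one identical label everywhere.

```python
from collections import Counter
from itertools import combinations


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labelled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same, non-empty set of items")
    n = len(labels_a)
    # p_o: observed agreement, the fraction of items with identical labels.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # p_e: chance agreement from each annotator's own label frequencies.
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if p_e == 1.0:
        # Both used one identical label everywhere; kappa is undefined.
        # Assumption: report perfect agreement here.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


def pairwise_cohen_kappa(annotations):
    """Cohen's kappa for every annotator pair, plus the mean over pairs."""
    if len(annotations) < 2:
        raise ValueError("need at least two annotators")
    scores = {
        (a, b): cohen_kappa(annotations[a], annotations[b])
        for a, b in combinations(sorted(annotations), 2)
    }
    mean = sum(scores.values()) / len(scores)
    return scores, mean
```

Averaging pairwise Cohen's kappa is one way to summarise a group of annotators. Fleiss' kappa is the other common choice, and the two numbers need not match. For a single pair, `sklearn.metrics.cohen_kappa_score(y1, y2)` is an existing implementation that can be used to check the sketch.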